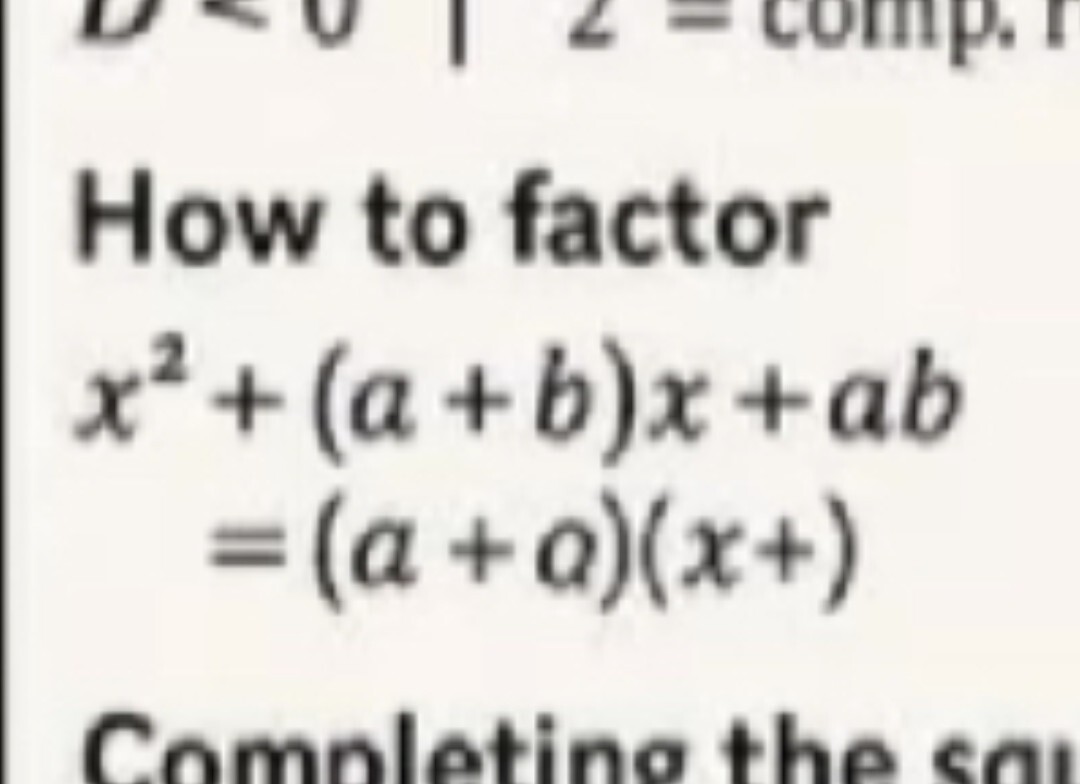

The more I look at it, the more I feel like my brain is being hacked.

This is a great visualization of what LLMs are about. The purpose of an LLM is to produce something that looks right. Just as this math sheet looks great at first glance while the actual content is garbage, LLMs create output that sounds right, and it doesn’t really matter whether it is. And improving LLMs is not about making the answers more reliable, it’s about making them sound even more convincing.

If we improve LLMs they will be able to remove that “+” at the end, so you won’t know it’s wrong until second glance.

But the worst thing is that some of the things are correct:

a²-b²=(a-b)(a+b)

a²+b²=c²

And these are common knowledge. This makes it even worse, since you have some correct parts to “prove” the rest is also correct.

Did you know that a²-b² = (a+b)(a-b)?

Because this AI really wants you to know that.

I also usually really want people to know that, but it’s not “completing the square” or “wffence of txsquare.”

Well it can’t decide what the solution is:

a²-b² = (a+b)(-b)

That is the offense of a texas square according to those flawless notes.

tan θ =

For a second I really thought all of high school math had left my brain.

TRICKS

343 shares

Facebook is so screwed.

Like, I didn’t think anything could kill the Behemoth, then Zuckerberg says hold my beer.